Our Paper "Self-Supervised Multi-Task Pretraining Improves Image Aesthetic Assessment" got accepted

26.04.2021

Our paper "Self-Supervised Multi-Task Pretraining Improves Image Aesthetic Assessment" has been accepted for publication at the New Trends in Image Restoration and Enhancement (NTIRE) workshop at CVPR 2021.

Automatically assessing the aesthetics of an image can be used to select the most aesthetic images, sort image collections, or optimize the parameters of image editing filters. Modern Image Aesthetic Assessment methods are based on Convolutional Neural Networks (CNNs) that take an image as input and output a score that is higher for more aesthetic images. Such models are usually initialized with weights trained on the ImageNet classification task and then fine-tuned on a labeled dataset such as AVA, so that they can build on already learned features. We argue that ImageNet classification is not well suited for Image Aesthetic Assessment models, since it is not designed to teach the network aesthetically relevant features.

Therefore, in this paper we propose a novel self-supervised multi-task pretraining method explicitly optimized for Image Aesthetic Assessment. Additionally, we introduce a dataset of high-quality images on which to perform the pretraining, which can also be repurposed for other image tasks in the future.

Abstract:

Neural networks for Image Aesthetic Assessment are usually initialized with weights of pretrained ImageNet models and then trained on a labeled image aesthetics dataset. We argue that the ImageNet classification task is not well suited for pretraining, since content-based classification is designed to make the model invariant to features that strongly influence an image's aesthetics, e.g., style-based features such as brightness or contrast.

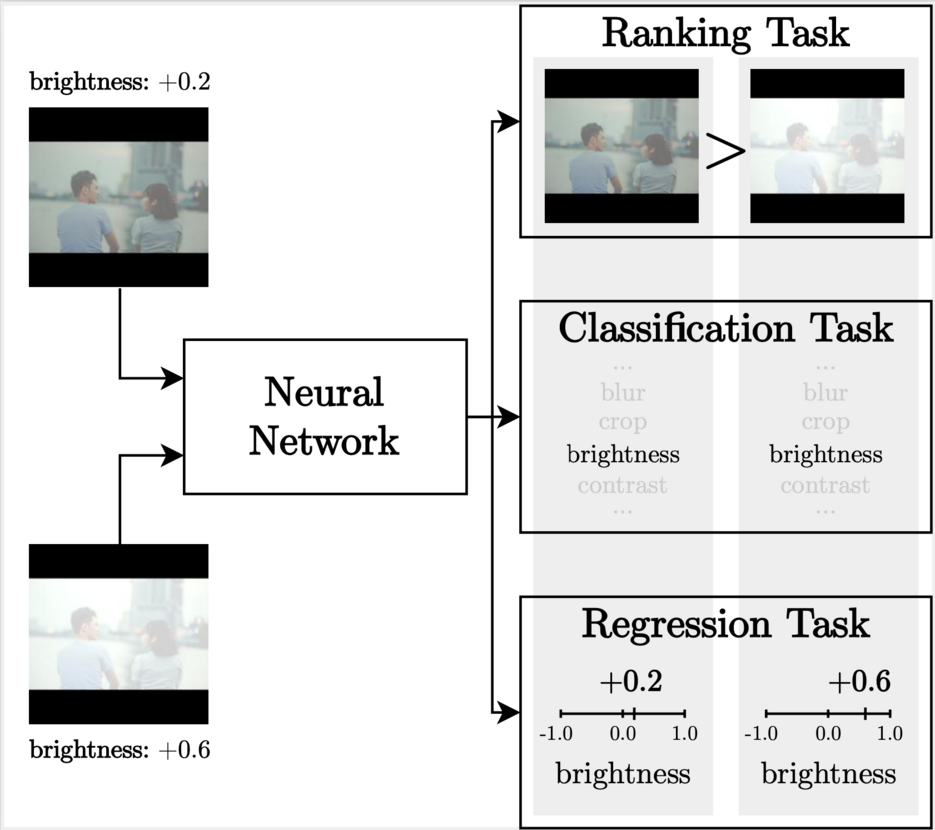

We propose to use self-supervised aesthetic-aware pretext tasks that let the network learn aesthetically relevant features, based on the observation that distorting aesthetic images with image filters usually reduces their appeal. To ensure that images are not accidentally improved when filters are applied, we introduce a large dataset consisting of highly aesthetic images as the starting point for the distortions. The network is then trained to rank less distorted images higher than their more distorted counterparts. To exploit the effects of multiple objectives, we also embed this task in a multi-task setting by adding either a self-supervised classification or a self-supervised regression task. In our experiments, we show that our pretraining improves performance over the ImageNet initialization and reduces the number of epochs until convergence by up to 47%. Additionally, we can match the performance of an ImageNet-initialized model while reducing the labeled training data by 20%.
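To make the pretext task more concrete, here is a minimal sketch of the ranking idea in plain Python. It is an illustration under stated assumptions, not the paper's implementation: the filter (`darken`), the distortion strengths, the hinge-style pairwise ranking loss with `margin=0.1`, the auxiliary "which filter was applied?" classification loss and `aux_weight` are all hypothetical choices, and model outputs are passed in as plain numbers in place of a CNN.

```python
import math

# Hypothetical distortion filter (an assumption, not the paper's filter set):
# darken an image given as nested lists of intensities in [0, 1] by scaling
# every pixel and clipping the result back into [0, 1].
def darken(image, factor):
    return [[min(1.0, max(0.0, p * factor)) for p in row] for row in image]

def distortion_chain(image, factors=(0.8, 0.6, 0.4)):
    # Index 0 is the original (highly aesthetic) image; higher indices are
    # increasingly distorted and should therefore be ranked lower.
    return [image] + [darken(image, f) for f in factors]

def pairwise_ranking_loss(scores, margin=0.1):
    # scores[i] is the network's score for chain element i.
    # Hinge penalty whenever a less distorted image i does not outscore a
    # more distorted image j > i by at least `margin`; averaged over pairs.
    losses = [
        max(0.0, margin - (scores[i] - scores[j]))
        for i in range(len(scores))
        for j in range(i + 1, len(scores))
    ]
    return sum(losses) / len(losses) if losses else 0.0

def cross_entropy(logits, target):
    # Hypothetical auxiliary self-supervised classification task, e.g.
    # predicting which filter was applied. Numerically stable log-softmax.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]

def multitask_loss(scores, aux_logits, aux_target, aux_weight=1.0):
    # Ranking objective plus a weighted auxiliary objective.
    return pairwise_ranking_loss(scores) + aux_weight * cross_entropy(aux_logits, aux_target)

if __name__ == "__main__":
    chain = distortion_chain([[0.5, 1.0], [0.25, 0.75]])
    print(len(chain))                                # original + 3 distortions
    print(pairwise_ranking_loss([0.9, 0.6, 0.2]))    # 0.0 (correctly ordered)
    print(pairwise_ranking_loss([0.2, 0.6]))         # positive (wrong order)
```

The regression variant mentioned above could, under the same assumptions, swap `cross_entropy` for a squared error between a predicted and the true distortion strength; the ranking term stays unchanged.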

We make our code, data, and pretrained models available.