Multilingual Natural Language Processing

Basic information

Lecturer: Prof. Dr. Goran Glavaš

Teaching Assistants: Benedikt Ebing, Fabian David Schmidt

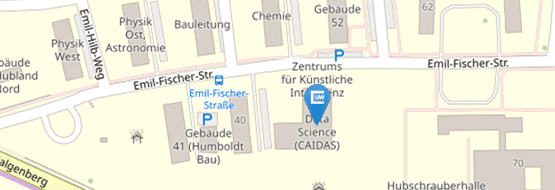

Lecture: Wednesday 10:00 - 12:00 in Übungsraum I (Informatik)

Exercises: Friday 12:00 - 14:00 in Übungsraum I (Informatik)

Kickoff: 26.4.2023

Intended audience: The course is recommended for Master's students of all CS-oriented programs (Master Informatik, Master eXtended AI, Master Business Informatics). Prior knowledge of core NLP and machine learning concepts is desirable but not mandatory.

Registration

It is not necessary to register for the lecture or exercises.

To access teaching materials and current announcements, you need to register for the WueCampus course.

To take the exam, you must register for it via WueStudy.

Further information on this can be found in our WueCampus course.

Learning outcomes

Students will acquire theoretical and practical knowledge of modern multilingual natural language processing and gain insight into cutting-edge research in (multilingual) NLP. They will learn how to represent texts from different languages in shared representation spaces that enable semantic comparison and cross-lingual transfer for various NLP tasks. Upon successful completion of the course, students will be well-equipped to solve practical NLP problems regardless of the language of the text data, and to determine the best strategy for maximizing performance in any concrete target language.

Schedule (tentative)

Introduction

26.4. L1: Languages of the world & Linguistic Universals; Course organization

Block I: Fundamentals

10.5. L2: Language modeling, word embedding models, tokenization & vocabulary

17.5. L3: Deep Learning for (Modern) NLP — Perceptron/MLP, Backprop, Batching, Gradient Descent, Dropout…

24.5. L4: Transformer Almighty & Pretraining Language Models (autoregressive, masked language modeling)

Block II: Multilinguality

31.5. L5: Multilingual Word Embedding Spaces (and cross-lingual transfer using them)

7.6. L6: Multilingual LMs (and cross-lingual transfer using them); Tasks, Benchmarks & Evaluation

14.6. L7: Curse of Multilinguality, Modularization, and Language Adaptation

21.6. L8: Transfer for Token-Level Tasks: Word Alignment & Label Projection

Block III: Advanced

28.6. L9: Neural Machine Translation

5.7. L10: Multilingual Sentence Representations

12.7. L11: Prompting and Large Language Models (LLMs); Instruction Fine-Tuning, ChatGPT/GPT-4