Research Activities

The research portfolio of the Chair of Space Informatics and Satellite Systems (SISAT) is built upon two complementary pillars. The first is Space Informatics, understood as the application of advanced, model-based and data-driven methods from computer science to achieve autonomy in space systems. The second is Satellite Systems, with a focus on the development and analysis of resource-efficient technologies for spacecraft, mission systems, and distributed mission architectures.

This foundation enables research focused on advanced Guidance, Navigation & Control (GNC) for autonomous and distributed space systems, with applications in proximity operations, formation flying, rendezvous and docking, and space sustainability, that is, ensuring the safe, long-term use of near-Earth space through debris mitigation and collision avoidance in support of space situational awareness and space domain awareness (SSA/SDA).

The core methodology combines data-driven techniques (e.g., Reinforcement Learning, Deep Learning) with model-based approaches (e.g., Relative Orbital Elements, Dual Quaternions), tailored specifically to space GNC tasks under realistic constraints. The emphasis is on practical application rather than on developing novel AI methods.

The SISAT research portfolio (Fig. 2) operationalizes this vision through three interconnected domains: Algorithms (for GNC/SSA), Modeling (analytical and data-driven dynamics), and Components (resource-efficient hardware). Data-driven methods are used to extend and support model-based solutions, all aligned with mission-specific requirements.

Guidance, Navigation and Control

Our team specializes in the development of distributed small satellite systems: groups of coordinated, autonomous spacecraft that operate collaboratively. We design advanced models, components, and algorithms to enhance the Guidance, Navigation, and Control (GNC) capabilities of such formations. A core focus lies in enabling autonomous rendezvous and docking (RVD) operations, which require precisely locating and physically connecting with target objects in space. Furthermore, we are exploring the integration of artificial intelligence (AI) technologies to increase autonomy, resilience, and efficiency in these complex mission scenarios. This includes motion planning, AI-based filtering and pose estimation, and guidance for low-thrust and propellantless control.

Vision-based Navigation

We are advancing vision-based navigation (VBN) systems through machine learning models capable of extracting spatial features from target objects in real time. These models are designed to maintain high accuracy under challenging operational conditions, including occlusions, varying lighting, and dynamic viewpoints.

To improve generalization and robustness in proximity operations, we employ a multi-source training approach that combines synthetic, lab-generated, and real-world imagery. This strategy is intended to bridge the domain gap between these image sources, so that the AI learns representations that remain invariant across diverse visual environments. The resulting AI-enhanced navigation frameworks aim to improve adaptability, reliability, and performance in close-range autonomous missions.

Adhesive Surfaces Coated with Gecko-Inspired Materials

Our concept uses satellites whose docking surfaces are coated with gecko-inspired materials: structured silicone adhesives that enable passive, reversible adhesion upon gentle contact, mimicking the natural grip of a gecko.

These materials offer significant advantages: they are cost-effective, easy to produce, and operate without electrical power. This research is supported by a collaboration between JMU, TU Berlin, and the Leibniz Institute for New Materials in Saarbrücken for material development and application.

The technology will be validated on the International Space Station (ISS) in late 2025 through an autonomous docking simulation under real space conditions. The experiment will employ two NASA Astrobee robots, free-flying cube-shaped robots used for onboard assistance. One will act as a non-cooperative target, while the other, equipped with the gecko-based docking mechanism and tailored algorithms, will perform the autonomous rendezvous and capture (see RAGGA project).

Projects

RAGGA

The RAGGA project aims to develop a complete Guidance, Navigation, and Control (GNC) system for capturing non-cooperative, tumbling space debris, a capability essential for sustainable space operations. Its vision-based navigation system uses a monocular camera and combines AI with traditional algorithms to estimate the target's position and attitude. For guidance, it employs a Bézier-curve-based motion planner to compute a safe, synchronized approach trajectory. Dual quaternion mathematics is used across all GNC components to provide a unified and efficient state representation. The project is part of the joint RAGGA-LIZARD initiative with TU Berlin; its ultimate goal is a technology demonstration aboard the International Space Station.
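
To illustrate the idea behind a Bézier-curve-based approach path, the following is a minimal sketch, not the RAGGA planner itself. It evaluates a cubic Bézier curve with De Casteljau's algorithm and checks the sampled path against a spherical keep-out zone around the target. The control points, the target-centred frame, the keep-out radius, and all function names are assumptions chosen for illustration, and the attitude synchronization with a tumbling target is not modelled.

```python
import numpy as np


def bezier_point(control_points, t):
    """Evaluate a Bezier curve at t in [0, 1] with De Casteljau's algorithm."""
    pts = np.asarray(control_points, dtype=float)
    # Repeated linear interpolation between neighbouring points
    # reduces the control polygon to a single point on the curve.
    while len(pts) > 1:
        pts = (1.0 - t) * pts[:-1] + t * pts[1:]
    return pts[0]


def sample_path(control_points, n=50):
    """Sample n points along the curve, including both end points."""
    ts = np.linspace(0.0, 1.0, n)
    return np.array([bezier_point(control_points, t) for t in ts])


def min_distance_to_target(path):
    """Smallest distance between the sampled path and the target at the origin."""
    return float(np.min(np.linalg.norm(path, axis=1)))


# Hypothetical control points in metres, expressed in a target-centred frame.
# The chaser starts 10 m behind the target and stops 1 m away from it.
control_points = [
    [-10.0, 0.0, 0.0],
    [-6.0, 4.0, 0.0],
    [-3.0, 4.0, 0.0],
    [-1.0, 0.0, 0.0],
]

path = sample_path(control_points)
keep_out_radius = 0.5  # assumed safety radius around the target, in metres
is_safe = min_distance_to_target(path) >= keep_out_radius
```

Because a Bézier curve always starts at its first control point and ends at its last, the planner can fix the start and hold positions directly and shape the approach with the interior control points only. The safety check here is performed on sampled points, so a real planner would need a finer or analytical check.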

IMPOSTER

The IMPOSTER (rendering IMages for oPtical navigatiOn to Support arTificial intElligence tRaining) project develops a framework for generating highly realistic synthetic image data to train AI models for visual navigation in space missions. The sandbox software is a step towards an AI-based, explainable, and uncertainty-aware pose estimation algorithm, pursued in collaboration with The Exploration Company. Such an algorithm enables navigation toward non-cooperative objects like space debris, supporting satellite servicing and removal missions. The framework has a modular architecture with interchangeable target objects and camera models, which allows parametric scene modifications. It optimizes rendering through CAD-to-3D conversion, balances detail with realism, replicates lighting and materials for high-fidelity output, and supplies AI applications with reliable, automatically labeled training data.

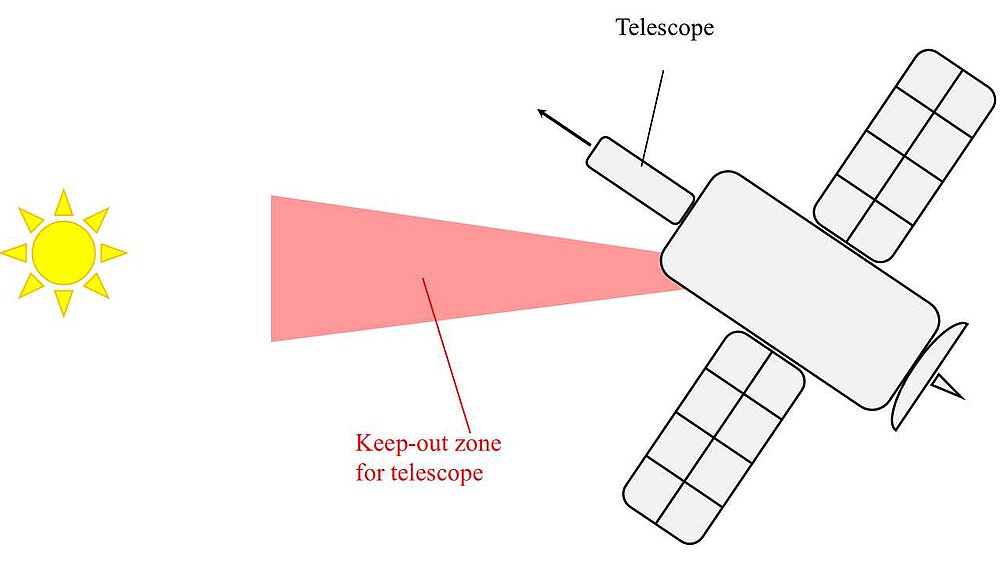

SPARTAN

The SPARTAN (Spacecraft Attitude Reorientation and Tasking with Reinforcement Learning) project, funded by a JMU Seed Grant, aims to implement deep reinforcement learning (DRL) algorithms for spacecraft attitude control. Its main focus is handling pointing keep-out constraints during reorientation maneuvers, so that sensitive onboard instruments (e.g., optical cameras) avoid pointing at bright celestial objects such as the Sun.
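
A pointing keep-out constraint is commonly expressed as a minimum angle between an instrument boresight and the direction to a bright object. The sketch below shows one way such a check could look; it is an illustration under stated assumptions, not the SPARTAN implementation. The scalar-first quaternion convention, the body-to-inertial attitude definition, the 30 degree half-angle, and all function names are assumptions.

```python
import numpy as np


def rotate_by_quaternion(q, v):
    """Rotate vector v by the unit quaternion q = [w, x, y, z] (scalar first)."""
    w = q[0]
    u = np.asarray(q[1:], dtype=float)
    v = np.asarray(v, dtype=float)
    # Equivalent to q * v * q^-1 for a unit quaternion.
    return v + 2.0 * np.cross(u, np.cross(u, v) + w * v)


def keep_out_check(q_body_to_inertial, boresight_body, sun_inertial, half_angle_deg):
    """Return (violated, angle_deg) for a conical keep-out zone around the Sun.

    The constraint is violated when the angle between the instrument
    boresight and the Sun direction is smaller than the cone half-angle.
    """
    boresight = rotate_by_quaternion(q_body_to_inertial, boresight_body)
    boresight = boresight / np.linalg.norm(boresight)
    sun = np.asarray(sun_inertial, dtype=float)
    sun = sun / np.linalg.norm(sun)
    cos_angle = np.clip(np.dot(boresight, sun), -1.0, 1.0)
    angle_deg = float(np.degrees(np.arccos(cos_angle)))
    return angle_deg < half_angle_deg, angle_deg


# Hypothetical scenario: camera boresight along the body x-axis,
# Sun along the inertial x-axis, 30 degree keep-out half-angle.
boresight_body = [1.0, 0.0, 0.0]
sun_inertial = [1.0, 0.0, 0.0]

q_identity = np.array([1.0, 0.0, 0.0, 0.0])
q_yaw_90 = np.array([np.cos(np.pi / 4), 0.0, 0.0, np.sin(np.pi / 4)])

violated_a, angle_a = keep_out_check(q_identity, boresight_body, sun_inertial, 30.0)
violated_b, angle_b = keep_out_check(q_yaw_90, boresight_body, sun_inertial, 30.0)
```

In a reinforcement learning setting, a check of this kind could feed a penalty term in the reward or a termination condition for the episode; which of these the project uses is not stated here.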