About the robotics group

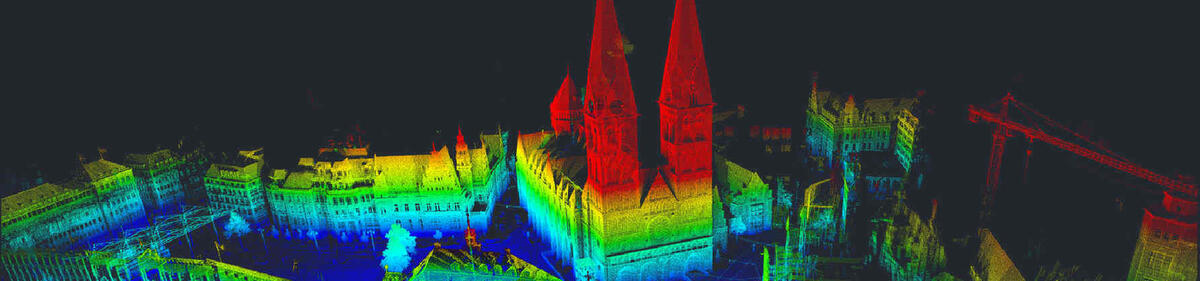

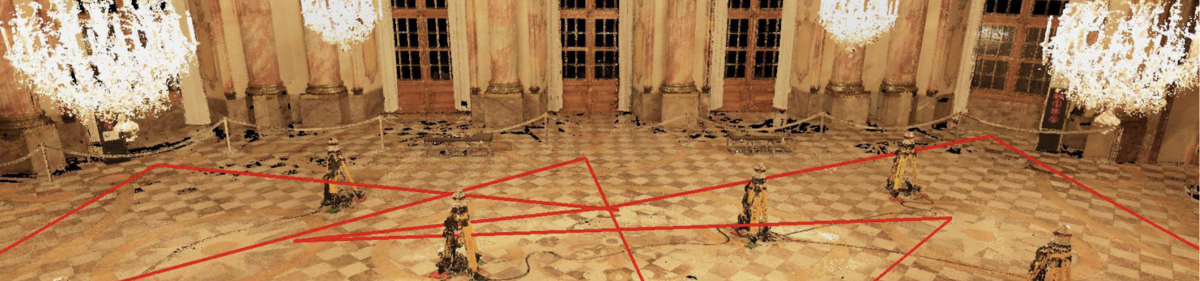

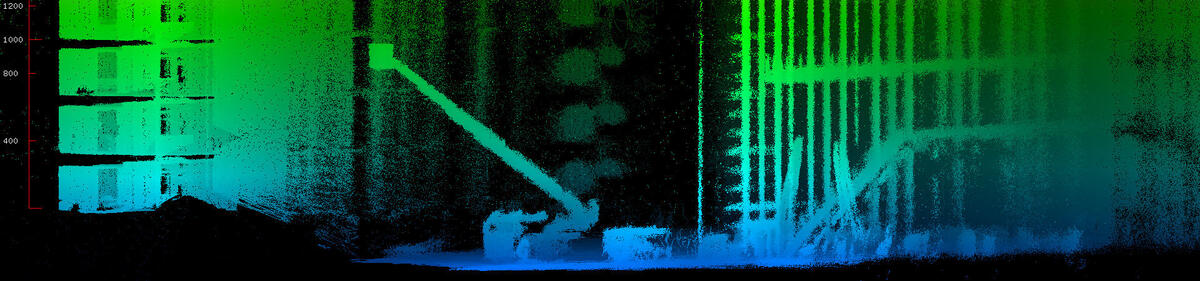

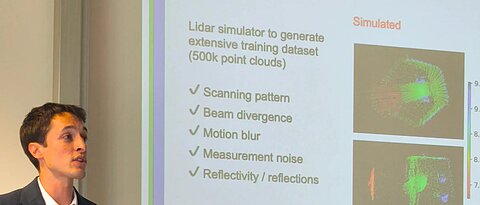

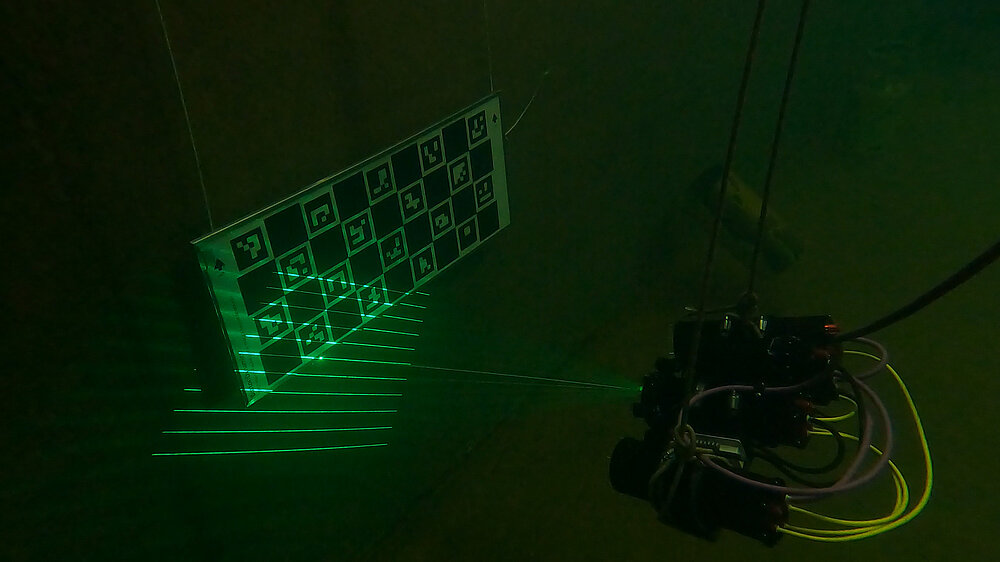

The department works on robotics and automation, cognitive systems, and artificial intelligence. Research focuses in particular on the 3D capture of surfaces, shapes, and environments using 3D laser scanners and cameras. Much of this work, past and ongoing, concerns SLAM (simultaneous localization and mapping), a robotics technique in which a mobile system builds a map of its environment while simultaneously estimating its own location within that map. SLAM is fundamental to the autonomy of mobile robots. The chair also works on high-accuracy optical measurement methods and algorithms for surface reconstruction. 3D sensor data are not acquired exclusively by mobile robots: in recent years, applications involving manipulators have been investigated frequently. Here there is less need to solve SLAM; instead, high-precision calibration is required to fully exploit the robot's specifications, for example to perform accurate 3D printing with industrial manipulators.

News

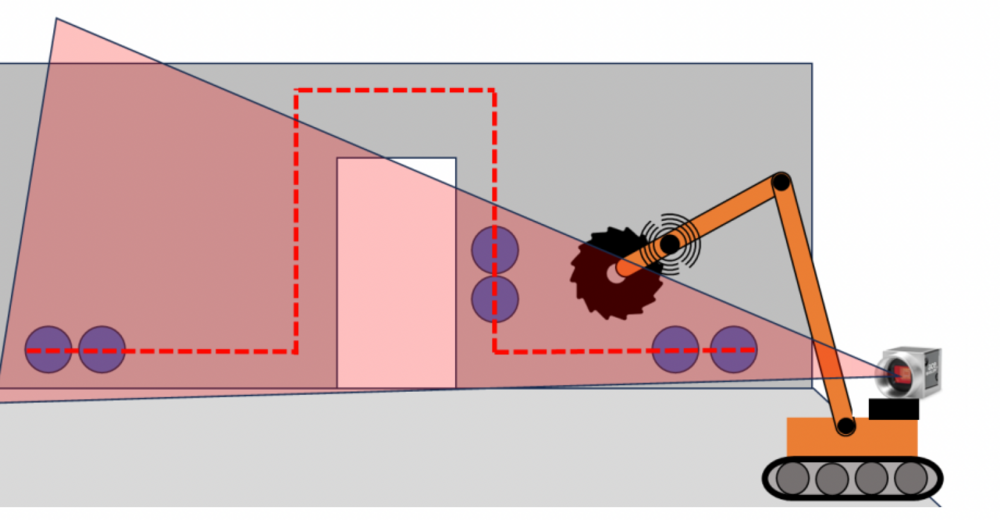

ElRobot

Development of an automated, modular electrician's robot that creates precise cable channels for electrical installations based on DIN 18015.

MultiUWS

We intend to develop a novel approach to geometric reconstruction based on implicit neural representations. In particular, we aim to develop AI-based algorithms that increase the resolution of depth images and fill gaps in individual scans.

DigitalPV

Through the «digitalPV» network, 15 partners from industry and academia are collaborating to develop innovative solutions to these challenges. The project is funded by the Federal Ministry for Economic Affairs and Climate Action.