Knowledge Distillation for Global Ecosystem Models

01.01.2024

1 million. 10 million. 1 billion. 500 billion? Large models keep getting larger, and as their parameter counts grow, so do their capabilities.

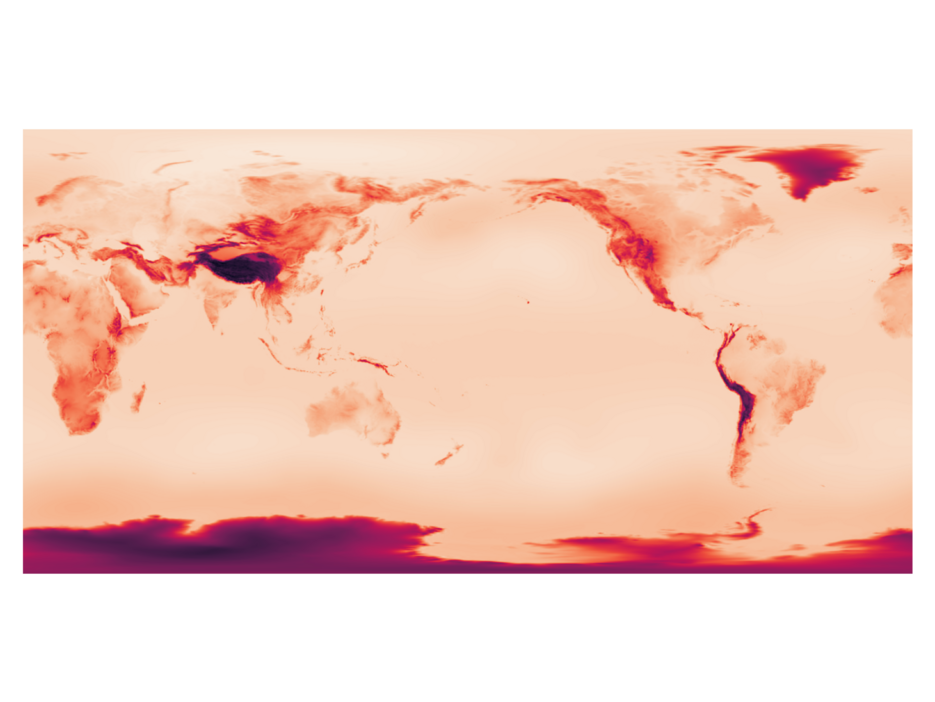

Current-generation atmospheric models are huge, with parameter counts ranging into the billions. This capacity also unlocks interesting capabilities, such as the versatility to use them for novel tasks.

Researchers can build upon publicly released weights to adapt the pre-trained model to novel tasks. For example, atmospheric weather models can also be used for ground-related tasks.

Still, the resulting (already adapted) model might be huge -- thankfully, there's a way around this: Knowledge Distillation, which transfers the knowledge from a large teacher model to a smaller student model.

In this work, you'll be applying the idea of distillation to ecosystem modelling. The goal is to shrink a ~100 million parameter model via distillation while maintaining its performance on vegetation modelling.

Your first steps involve a thorough literature review on distilling regression models (i.e., models that don't output a classification label ["This is a cat"] but rather a large array of numbers). You'll then code the distillation pipeline for transferring the large teacher model's knowledge into a smaller counterpart. The results will then be compared to a second, similarly sized network trained from scratch (to check whether distillation is beneficial here).
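To make the idea of such a pipeline more concrete, here is a minimal sketch of one possible training objective for regression outputs. It is not the method prescribed for this project: the function names, the weighting factor `alpha`, and the choice of mean squared error are illustrative assumptions. The student is penalised both for deviating from the ground truth and for deviating from the teacher's prediction.

```python
from typing import Sequence


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equally long, non-empty sequences."""
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("pred and target must be non-empty and equally long")
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def distillation_loss(
    student_out: Sequence[float],
    teacher_out: Sequence[float],
    ground_truth: Sequence[float],
    alpha: float = 0.5,  # hypothetical weighting, to be tuned
) -> float:
    """Blend of supervised error and teacher-matching error.

    alpha = 1.0 ignores the teacher (plain supervised training),
    alpha = 0.0 trains the student purely to imitate the teacher.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    hard = mse(student_out, ground_truth)  # error against the labels
    soft = mse(student_out, teacher_out)   # error against the teacher
    return alpha * hard + (1.0 - alpha) * soft
```

In a real pipeline, the same blend would be computed on model outputs inside a deep learning framework. The literature review should reveal whether alternatives, such as matching intermediate features, suit regression models better.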

If you are adventurous, we can then expand the work to compare runtime statistics of the large and the distilled models on a small computer such as a Raspberry Pi. Ideally, the smaller model runs faster while producing the same high-quality output as its teacher.
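As a rough sketch of how such runtime statistics could be collected, the helper below times repeated calls to any inference function using only the Python standard library. The name `benchmark`, its parameters, and the reported statistics are assumptions for illustration; the actual measurement setup on the device is still open.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(
    fn: Callable[[], object],
    warmup: int = 3,    # untimed calls to fill caches, hypothetical default
    repeats: int = 20,  # timed calls, hypothetical default
) -> Dict[str, float]:
    """Time repeated calls of fn and return summary statistics in seconds."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    for _ in range(warmup):
        fn()
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return {
        "median_s": statistics.median(timings),
        "mean_s": statistics.mean(timings),
        "max_s": max(timings),
    }
```

It would be called once per model, for example `benchmark(lambda: model(sample_input))`, where `model` and `sample_input` are placeholders for the teacher or student and a representative input. Comparing medians rather than single runs reduces the influence of background activity on the device.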

Don't hesitate to contact me for further details; you are welcome to bring your own ideas to this topic!

Supervisor: Pascal Janetzky