Our paper "Feature relevance XAI in anomaly detection: reviewing approaches and challenges" has been accepted for publication in the "Horizons in Artificial Intelligence" journal

01/17/2023

In our work we review existing approaches that explain AI-based anomaly detectors by highlighting features relevant for their predictions.

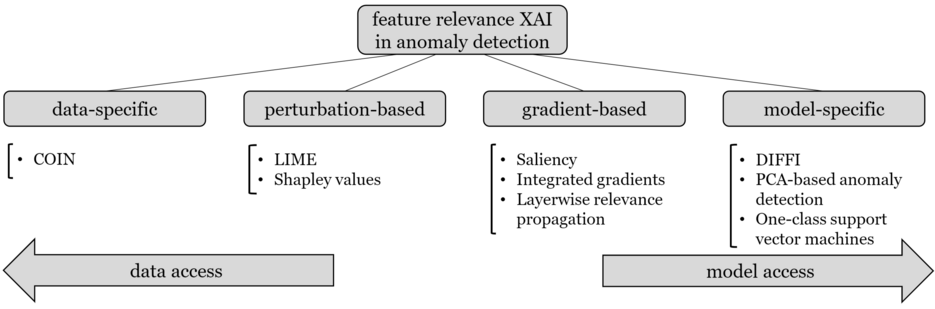

Our paper reviews explainable artificial intelligence (XAI) approaches that provide feature relevance explanations for anomaly detectors. These approaches explain an individual prediction of an AI-based anomaly detector by highlighting the input features that led the detector to its decision. We cover the approaches in this popular research domain in detail and highlight the open challenges that remain within it.
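To make the idea of "highlighting relevant input features" concrete, here is a minimal, hypothetical sketch of one generic local post-hoc technique, occlusion: each feature of the explained sample is replaced by a reference value, and the resulting drop in anomaly score is taken as that feature's relevance. It is not the method of any specific paper covered in the review. The detector (a sum of squared z-scores), the function names `anomaly_score` and `occlusion_relevance`, and the choice of the training mean as the reference value are all assumptions made for illustration.

```python
import numpy as np


def anomaly_score(X, mean, std):
    """Hypothetical detector: sum of squared z-scores per row (higher = more anomalous)."""
    z = (X - mean) / std
    return np.sum(z ** 2, axis=1)


def occlusion_relevance(score_fn, x, baseline):
    """Relevance of feature j = drop in anomaly score when x[j] is replaced by baseline[j].

    The explainer only queries score_fn (black-box access to the detector);
    the baseline is assumed here to be derived from training data.
    """
    base_score = score_fn(x[None, :])[0]
    relevance = np.empty_like(x, dtype=float)
    for j in range(x.shape[0]):
        x_occluded = x.copy()
        x_occluded[j] = baseline[j]  # "remove" feature j by setting it to its reference value
        relevance[j] = base_score - score_fn(x_occluded[None, :])[0]
    return relevance


rng = np.random.default_rng(0)
X_train = rng.normal(size=(500, 4))  # synthetic "normal" training data
mean, std = X_train.mean(axis=0), X_train.std(axis=0)


def score(X):
    """Detector fitted on the synthetic training data."""
    return anomaly_score(X, mean, std)


x = np.array([0.1, 6.0, -0.2, 0.3])  # feature 1 lies far outside the training distribution
rel = occlusion_relevance(score, x, baseline=mean)
print(np.argmax(rel))  # → 1
```

Because this toy detector is additive over features, occluding a feature removes exactly its squared z-score, so the relevances sum to the anomaly score. For non-additive detectors occlusion gives no such guarantee, and the result depends on the chosen reference value.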

Abstract

With the complexity of artificial intelligence systems increasing continuously in recent years, studies explaining these complex systems have grown in popularity. While much work has focused on explaining artificial intelligence systems in popular domains such as classification and regression, explanations in the area of anomaly detection have only recently received increasing attention from researchers. In particular, explaining individual model decisions of a complex anomaly detector by highlighting which inputs were responsible for a decision, commonly referred to as local post-hoc feature relevance, has lately been studied by several authors. In this paper, we systematically structure these works based on their access to training data and the anomaly detection model, and provide a detailed overview of how they operate in the anomaly detection domain. We demonstrate their performance and highlight their limitations in multiple experimental showcases, and discuss current challenges and opportunities for future work in feature relevance XAI for anomaly detection.
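One dimension along which explainers differ is how much access they have to the anomaly detection model. As a hypothetical illustration of the white-box end, the sketch below assumes a Mahalanobis-distance detector whose internals (mean `mu` and inverse covariance `prec`) the explainer can read. It computes a gradient-times-input relevance relative to `mu`, using the analytic gradient `2 * prec @ (x - mu)`. Because the score is quadratic in `x - mu`, halving gradient × (x − mu) yields relevances that sum exactly to the score. The detector, the function names, and the synthetic data are assumptions for illustration, not an approach taken from the reviewed works.

```python
import numpy as np


def fit_mahalanobis(X_train):
    """Fit a simple Mahalanobis-distance detector: mean and inverse covariance (precision)."""
    mu = X_train.mean(axis=0)
    cov = np.cov(X_train, rowvar=False)
    return mu, np.linalg.inv(cov)


def mahalanobis_score(x, mu, prec):
    """Anomaly score: squared Mahalanobis distance of x from the training mean."""
    d = x - mu
    return float(d @ prec @ d)


def gradient_x_input_relevance(x, mu, prec):
    """White-box relevance using the analytic gradient 2 * prec @ (x - mu).

    Scaling (x - mu) * gradient by 0.5 makes the relevances sum exactly to
    the score, since the score is a quadratic form in (x - mu).
    """
    d = x - mu
    grad = 2.0 * prec @ d
    return 0.5 * d * grad


rng = np.random.default_rng(1)
cov_true = [[1.0, 0.8, 0.0], [0.8, 1.0, 0.0], [0.0, 0.0, 1.0]]
X_train = rng.multivariate_normal([0.0, 0.0, 0.0], cov_true, size=1000)
mu, prec = fit_mahalanobis(X_train)

# Features 0 and 1 are strongly positively correlated in training; this sample breaks that pattern.
x = np.array([2.0, -2.0, 0.1])
rel = gradient_x_input_relevance(x, mu, prec)
print(rel.round(2), round(mahalanobis_score(x, mu, prec), 2))
```

Here features 0 and 1 receive nearly all of the relevance, although each value on its own is only two standard deviations from the mean: the anomaly lies in their broken correlation, which this explainer can attribute only because it reads the covariance from the model. With correlated features, individual relevances from this decomposition can also be negative.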