Our paper "LM4KG: Improving Common Sense Knowledge Graphs with Language Models" has been presented at ISWC 2020

11/23/2020

Our paper "LM4KG: Improving Common Sense Knowledge Graphs with Language Models", which concerns the enrichment of knowledge graphs through a pre-trained language model, was presented at the International Semantic Web Conference (ISWC) 2020.

Abstract

Language Models (LMs) and Knowledge Graphs (KGs) are both active research areas in Machine Learning and the Semantic Web. While LMs on their own have brought great improvements to many downstream tasks, they are often combined with KGs, which provide additional aggregated, well-structured knowledge. Usually, this is done by leveraging KGs to improve LMs. But what happens if we turn this around and use LMs to improve KGs?

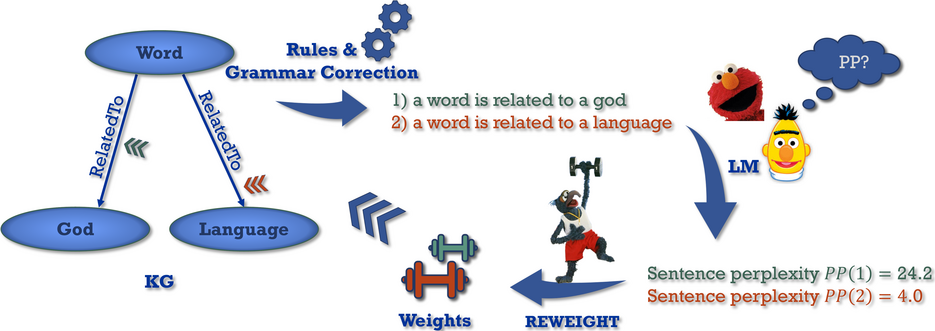

In this paper, we propose a method enabling the use of the knowledge inherently encoded in LMs to automatically improve explicit knowledge represented in common sense KGs. Edges in these KGs represent relations between concepts, but the strength of the relations is often not clear. We propose to transform KG relations to natural language sentences, allowing us to utilize the information contained in large LMs to rate these sentences through a new perplexity-based measure, Refined Edge WEIGHTing (REWEIGHT). We test our scoring scheme REWEIGHT on the popular LM BERT to produce new weights for the edges in the well-known ConceptNet KG. By retrofitting existing word embeddings to our modified ConceptNet, we create ConceptNet NumBERTbatch embeddings and show that these outperform the original ConceptNet Numberbatch on multiple established semantic similarity datasets.
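The abstract outlines a pipeline: verbalize each KG edge as a sentence, score the sentence with a language model, turn the score into a new edge weight, and retrofit word embeddings to the reweighted graph. The following is a minimal Python sketch of that pipeline under stated assumptions. It is not the paper's REWEIGHT implementation. The relation templates, whitespace tokenization, use of pseudo-perplexity (masking each token in turn, a common way to get a perplexity-like score from a masked LM such as BERT), the mapping weight = 1 / pseudo-perplexity, and the weighted variant of retrofitting (in the spirit of Faruqui et al., 2015) are all assumptions. All names (`TEMPLATES`, `edge_to_sentence`, `pseudo_perplexity`, `edge_weights`, `retrofit`, `toy_masked_log_prob`) are hypothetical. A toy scorer stands in for BERT; in practice `masked_log_prob` would query a masked LM.

```python
import math
from typing import Callable, Dict, List, Tuple

Edge = Tuple[str, str, str]  # (head, relation, tail), e.g. ("ice_cream", "IsA", "dessert")
Vector = List[float]
# Masked-LM scorer (assumed interface): log P(tokens[i] | all other tokens, position i masked).
MaskedLogProb = Callable[[List[str], int], float]

# Hypothetical verbalization templates; illustrative only, not the paper's templates.
TEMPLATES: Dict[str, str] = {
    "IsA": "{head} is a {tail}.",
    "UsedFor": "{head} is used for {tail}.",
    "PartOf": "{head} is part of {tail}.",
    "AtLocation": "you are likely to find {head} in {tail}.",
}


def edge_to_sentence(edge: Edge) -> str:
    """Turn a KG edge into a natural-language sentence via a fixed template."""
    head, relation, tail = edge
    # ConceptNet-style concept names use underscores for spaces.
    return TEMPLATES[relation].format(head=head.replace("_", " "), tail=tail.replace("_", " "))


def pseudo_perplexity(tokens: List[str], masked_log_prob: MaskedLogProb) -> float:
    """Mask each token in turn and exponentiate the negative mean log-probability."""
    if not tokens:
        raise ValueError("sentence must contain at least one token")
    total = sum(masked_log_prob(tokens, i) for i in range(len(tokens)))
    return math.exp(-total / len(tokens))


def edge_weights(edges: List[Edge], masked_log_prob: MaskedLogProb) -> Dict[Edge, float]:
    """Assumed mapping: weight = 1 / pseudo-perplexity, so more fluent sentences get larger weights."""
    weights: Dict[Edge, float] = {}
    for edge in edges:
        # Simplified whitespace tokenization; BERT itself uses WordPiece subwords.
        tokens = edge_to_sentence(edge).rstrip(".").split()
        weights[edge] = 1.0 / pseudo_perplexity(tokens, masked_log_prob)
    return weights


def retrofit(embeddings: Dict[str, Vector], weights: Dict[Edge, float],
             alpha: float = 1.0, iterations: int = 10) -> Dict[str, Vector]:
    """Weighted retrofitting: pull each vector toward its KG neighbours, scaled by edge weight."""
    neighbours: Dict[str, List[Tuple[str, float]]] = {w: [] for w in embeddings}
    for (head, _relation, tail), weight in weights.items():
        if head in embeddings and tail in embeddings:
            neighbours[head].append((tail, weight))
            neighbours[tail].append((head, weight))
    new = {w: list(v) for w, v in embeddings.items()}
    for _ in range(iterations):
        for word, nbrs in neighbours.items():
            if not nbrs:
                continue  # words without edges keep their original vector
            # Weighted average of the original vector (weight alpha) and current neighbour vectors.
            acc = [alpha * x for x in embeddings[word]]
            for nbr, weight in nbrs:
                for k, x in enumerate(new[nbr]):
                    acc[k] += weight * x
            denom = alpha + sum(weight for _, weight in nbrs)
            new[word] = [x / denom for x in acc]
    return new


def toy_masked_log_prob(tokens: List[str], i: int) -> float:
    """Toy stand-in for a masked LM: 'rock' is improbable, every other token has p = 0.5."""
    return math.log(0.01) if tokens[i] == "rock" else math.log(0.5)


if __name__ == "__main__":
    edges = [("ice_cream", "IsA", "dessert"), ("ice_cream", "IsA", "rock")]
    w = edge_weights(edges, toy_masked_log_prob)
    print(w)  # the plausible edge gets the larger weight
    vecs = {"ice_cream": [1.0, 0.0], "dessert": [0.0, 1.0], "rock": [0.0, -1.0]}
    print(retrofit(vecs, w))
```

With the toy scorer, the plausible edge "ice cream is a dessert" receives a weight of 0.5 and the implausible "ice cream is a rock" about 0.23, so retrofitting pulls `ice_cream` more strongly toward `dessert` than toward `rock`. The update is applied in place within each sweep, as in the original retrofitting code. ConceptNet Numberbatch's actual retrofitting procedure differs in its details.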

You can find the paper on BibSonomy and the video of our presentation on our Data Science YouTube channel.