Our Paper "Generative Inpainting for Shapley-Value-Based Anomaly Explanation" was accepted at xAI 2024

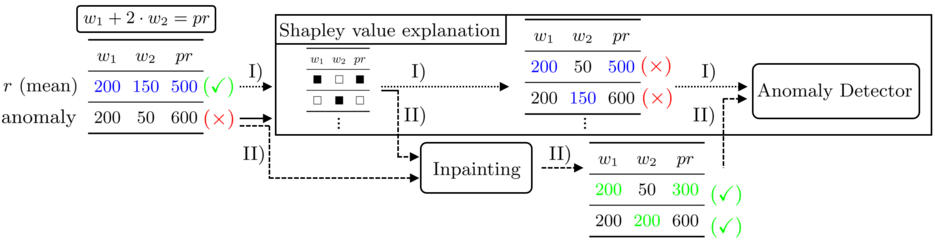

16.04.2024

In our paper, we use generative diffusion models to support a specific class of explainable AI methods, helping them better explain machine learning models that detect anomalous activities.

We are glad to be featured at the 2nd World Conference on Explainable Artificial Intelligence (xAI 2024) and look forward to presenting our most recent work in Malta. Our work improves existing post-hoc methods, which explain already trained machine learning models, by adapting one of their hyperparameter choices (how replacement values are set) to the problem of anomaly detection.

Abstract:

Feature relevance explanations currently constitute the most widely used type of explanation in anomaly-detection-related tasks such as cyber security and fraud detection. Recent works have underscored the importance of optimizing the hyperparameters of post-hoc explainers, since these have a large impact on the resulting explanation quality. In this work, we propose a new method to set the replacement-value hyperparameter of Shapley-value-based post-hoc explainers. Our method leverages ideas from the domain of generative image inpainting, where generative machine learning models are used to replace parts of a given input image. We show that these generative models can also be applied to generate replacement values for tabular data in Shapley-value-based feature relevance explainers. Experimentally, we train a denoising diffusion probabilistic model for generative inpainting on two tabular anomaly detection datasets from the domains of network intrusion detection and occupational fraud detection, and integrate the generative inpainting model into the SHAP explanation framework. We empirically show that generative inpainting can achieve consistently strong explanation quality when explaining different anomaly detectors on tabular data.
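To make the role of the replacement-value hyperparameter more concrete, here is a minimal Python sketch of how replacement values enter a Shapley-value explanation of an anomaly score. It is not the method from the paper: instead of a trained denoising diffusion model, it uses the conditional mean of a multivariate Gaussian as a simple stand-in "inpainter", and it enumerates all feature coalitions exactly rather than using the approximations in the SHAP framework. The function names (`shapley_values`, `mean_replacement`, `gaussian_inpainting`, `anomaly_score`) and the toy data are hypothetical and chosen for illustration only.

```python
import itertools
import math

import numpy as np


def shapley_values(model, x, replace):
    """Exact Shapley values of model(x) by enumerating all feature coalitions.

    replace(x, keep) must return a copy of x in which every feature with
    keep[j] == False is substituted by a replacement value.
    """
    d = len(x)
    phi = np.zeros(d)
    for i in range(d):
        others = [j for j in range(d) if j != i]
        for size in range(d):
            # Shapley weight |S|! (d - |S| - 1)! / d!
            weight = math.factorial(size) * math.factorial(d - size - 1) / math.factorial(d)
            for subset in itertools.combinations(others, size):
                keep = np.zeros(d, dtype=bool)
                for j in subset:
                    keep[j] = True
                without_i = model(replace(x, keep))
                keep[i] = True
                with_i = model(replace(x, keep))
                phi[i] += weight * (with_i - without_i)
    return phi


def mean_replacement(mu):
    """Common baseline choice: absent features take a fixed value (the mean)."""
    def replace(x, keep):
        z = mu.copy()
        z[keep] = x[keep]
        return z
    return replace


def gaussian_inpainting(mu, cov):
    """Hypothetical stand-in for a generative inpainter: fill absent features
    with their conditional mean given the present ones (Gaussian assumption)."""
    def replace(x, keep):
        miss = ~keep
        if not miss.any():
            return x.copy()
        if not keep.any():
            return mu.copy()
        z = x.copy()
        s_mo = cov[np.ix_(miss, keep)]
        s_oo = cov[np.ix_(keep, keep)]
        z[miss] = mu[miss] + s_mo @ np.linalg.solve(s_oo, x[keep] - mu[keep])
        return z
    return replace


# Toy data: features 0 and 1 are strongly correlated, feature 2 is independent.
mu = np.zeros(3)
cov = np.array([[1.0, 0.9, 0.0],
                [0.9, 1.0, 0.0],
                [0.0, 0.0, 1.0]])


def anomaly_score(z):
    """Squared Mahalanobis distance as a simple anomaly detector."""
    diff = z - mu
    return float(diff @ np.linalg.solve(cov, diff))


x = np.array([2.0, -1.0, 0.0])  # breaks the correlation between features 0 and 1
score = anomaly_score(x)
phi_mean = shapley_values(anomaly_score, x, mean_replacement(mu))
phi_inp = shapley_values(anomaly_score, x, gaussian_inpainting(mu, cov))
print("score:", round(score, 3))
print("mean replacement:   ", np.round(phi_mean, 3))
print("Gaussian inpainting:", np.round(phi_inp, 3))
```

Both replacement strategies map the empty coalition to the mean, where the score is zero, so in both cases the attributions sum to the full anomaly score and feature 2 receives zero. They do, however, split that score differently between features 0 and 1: the inpainting variant keeps replaced values consistent with the observed features, which is the property the generative approach aims for on real tabular data.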