XAI Benchmarking for Cyber Security

06.05.2025

Objective:

Investigate how to derive explanation-relevant features from simulated SIEM logs by analyzing known attack scenarios. Use publicly available annotated attack logs (e.g., from securitydatasets.com) to identify explanation-relevant patterns using data science methods. The goal is to build a feature annotation pipeline that supports future XAI evaluation in cyber security.

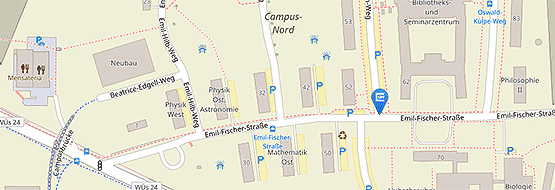

Supervisor: Daniel Schlör

Key Tasks:

- Select a small set of attack scenarios from securitydatasets.com (e.g., defense evasion, lateral movement) or other sources

- Load and preprocess JSON/EVTX/Sysmon logs

- Analyze the logs to extract statistically or semantically relevant features (e.g., rare parent-child processes, registry writes, command line tokens)

- Use interpretable ML models (e.g., decision trees, rule learning) to derive human-understandable decision criteria

- Parse detection rules (e.g., from Sigma) to automatically extract the key features referenced by SOC analysts and integrate them into the feature extraction pipeline

- Create an (active learning) annotation pipeline

- Annotate logs with candidate explanation features or tags
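
As a rough illustration of the loading and feature-extraction tasks above, the following sketch parses JSON-lines events and flags rare parent-child process pairs. It assumes flattened Sysmon process-creation records (event ID 1) with `EventID`, `ParentImage`, and `Image` fields; the sample events, the helper names, and the `max_count` threshold are hypothetical and would need adapting to the chosen dataset.

```python
import json
from collections import Counter

# Hypothetical sample events; real logs would be read from a JSON-lines file.
SAMPLE_EVENTS = [
    {"EventID": 1, "ParentImage": r"C:\Windows\explorer.exe",
     "Image": r"C:\Program Files\Chrome\chrome.exe"},
    {"EventID": 1, "ParentImage": r"C:\Windows\explorer.exe",
     "Image": r"C:\Program Files\Chrome\chrome.exe"},
    {"EventID": 1, "ParentImage": r"C:\Windows\explorer.exe",
     "Image": r"C:\Program Files\Chrome\chrome.exe"},
    {"EventID": 1, "ParentImage": r"C:\Program Files\Office\WINWORD.EXE",
     "Image": r"C:\Windows\System32\powershell.exe"},
    {"EventID": 3, "Image": r"C:\Program Files\Chrome\chrome.exe"},
]
SAMPLE_LINES = [json.dumps(e) for e in SAMPLE_EVENTS] + ["{not valid json"]


def parse_events(lines):
    """Yield one dict per non-empty JSON line, skipping malformed lines."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            yield json.loads(line)
        except json.JSONDecodeError:
            continue


def basename(path):
    """Return the lower-cased file name of a Windows or POSIX path."""
    return path.replace("/", "\\").rsplit("\\", 1)[-1].lower()


def parent_child_pairs(events):
    """Collect (parent, child) image names from process-creation events."""
    pairs = []
    for event in events:
        # EventID may be stored as an int or a string, so compare as text.
        if str(event.get("EventID")) != "1":
            continue
        parent, child = event.get("ParentImage"), event.get("Image")
        if parent and child:
            pairs.append((basename(parent), basename(child)))
    return pairs


def rare_pairs(pairs, max_count=1):
    """Return the pairs that occur at most `max_count` times, with counts."""
    counts = Counter(pairs)
    return {pair: n for pair, n in counts.items() if n <= max_count}


if __name__ == "__main__":
    events = list(parse_events(SAMPLE_LINES))
    print(rare_pairs(parent_child_pairs(events)))
```

Raw frequency is only one possible notion of rarity; a baseline built from benign logs would likely give more meaningful candidates than counts taken from the attack recording alone.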
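
The Sigma-related task above could start from something like the sketch below, which collects the field names referenced in a rule's `detection` section. It assumes the rule has already been parsed from YAML into a Python dict; the example rule and the function name `sigma_fields` are made up for illustration, and only the common cases (field maps, lists of field maps, keyword lists) are handled.

```python
from collections import Counter

# Hypothetical rule, shaped like the dict a YAML parser would return.
SAMPLE_RULE = {
    "title": "Illustrative: Office application spawning PowerShell",
    "logsource": {"category": "process_creation", "product": "windows"},
    "detection": {
        "selection_parent": {
            "ParentImage|endswith": ["\\winword.exe", "\\excel.exe"],
        },
        "selection_child": {
            "Image|endswith": "\\powershell.exe",
            "CommandLine|contains|all": ["-enc", "-nop"],
        },
        "filter": [{"User|contains": "SYSTEM"}],
        "keywords": ["mimikatz"],
        "condition": "(all of selection_* or keywords) and not filter",
    },
}


def sigma_fields(rule):
    """Count how often each log field is referenced in the detection section."""
    fields = Counter()
    for name, search in rule.get("detection", {}).items():
        # These keys describe how searches are combined, not what is matched.
        if name in ("condition", "timeframe"):
            continue
        items = search if isinstance(search, list) else [search]
        for item in items:
            # Plain strings are keyword searches without a field name.
            if not isinstance(item, dict):
                continue
            for key in item:
                # Drop value modifiers such as "|endswith" or "|contains|all".
                fields[key.split("|", 1)[0]] += 1
    return fields


if __name__ == "__main__":
    for field, count in sigma_fields(SAMPLE_RULE).most_common():
        print(field, count)
```

Aggregating these counts over a whole rule collection would give a ranking of the fields that rule authors rely on, which could then seed the feature extraction pipeline.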
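
For the active learning part of the annotation pipeline, one common strategy is uncertainty sampling: the events on which a model is least confident are shown to the annotator first. The sketch below assumes a binary classifier that outputs a probability of maliciousness per event; the event IDs, scores, and the function name `select_for_annotation` are hypothetical, and uncertainty sampling is only one of several possible query strategies.

```python
# Hypothetical model outputs: event id -> predicted probability of "malicious".
SCORES = {
    "evt-001": 0.97,
    "evt-002": 0.52,
    "evt-003": 0.08,
    "evt-004": 0.40,
}


def select_for_annotation(probabilities, budget=2):
    """Return the ids of the `budget` events whose score is closest to 0.5.

    Ties are broken by event id so that the selection is deterministic.
    """
    ranked = sorted(
        probabilities.items(),
        key=lambda item: (abs(item[1] - 0.5), item[0]),
    )
    return [event_id for event_id, _ in ranked[:budget]]


if __name__ == "__main__":
    print(select_for_annotation(SCORES))
```

After each annotation round the model would be retrained on the enlarged labeled set and the selection repeated.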

Extension Directions (Master's Thesis / Practica):

- Semi-Automated Labeling Framework Using External Datasets

- Explainability Evaluation Benchmarks Using Public Logs

- Multi-Modal Knowledge Graph Construction